Convolutional Neural Networks: Now Computers Can See

What is a Convolutional neural network?

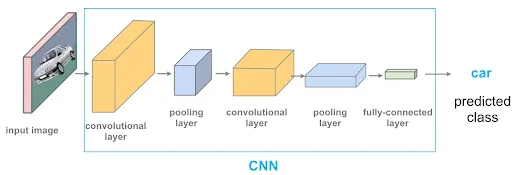

A Convolutional Neural Network (CNN) is a type of deep learning model that is inspired by the structure and function of the human brain’s visual cortex. It is primarily used in computer vision tasks such as image classification, object detection, and segmentation.

The CNN architecture involves multiple layers of interconnected neurons that are organized in a way to simulate how the brain processes information received from the eyes. CNNs are particularly useful for image recognition tasks, where the goal is to predict the content of an image, such as whether it contains a car or not.

Overall, CNNs have revolutionized computer vision and have enabled significant advances in a wide range of applications, from self-driving cars to medical imaging.

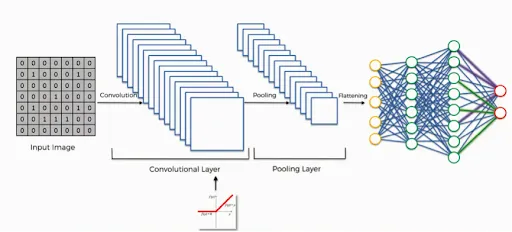

The image below illustrates the fundamental layers involved in predicting the content of an image, such as whether it contains a car or not. Each of these layers is discussed in detail in the sections that follow.

Figure 1

However, for the network to accurately predict whether a new picture contains a car, it must first be trained on thousands of labeled images, including many images of cars. During training, the network is fed this large dataset and learns the visual features that are common to cars. Once trained, it can make predictions on new, unseen images with high accuracy.

Let’s look at each layer involved in predicting the content of the car picture above.

Convolutional Neural Network Layer

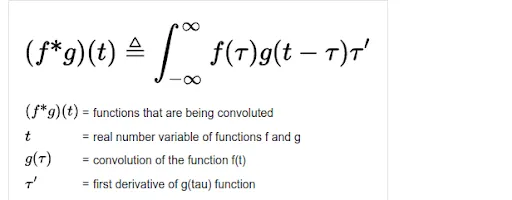

In simple terms, the convolution of two functions $f$ and $g$ is defined as follows:

$$(f * g)(t) = \int_{-\infty}^{\infty} f(\tau)\, g(t - \tau)\, d\tau$$

However, in this tutorial we will avoid going too deeply into the mathematics and will instead use intuition to understand the essential concepts of a convolutional neural network.

If you are interested in the maths, please check out:

Before proceeding further, it’s essential to understand how an image is represented on a computer.

- For a black-and-white (grayscale) image, a 2D array is created where each element represents the intensity of one pixel.

- For a color image, say in RGB format, a 3D array is created: the first two dimensions are the image's height and width, and the third dimension has three channels holding the intensities of the red, green, and blue colors that make up each pixel.
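As a minimal sketch of these two layouts, here are tiny images written as plain Python nested lists. The pixel values are made up, and the 0 to 255 (8-bit) intensity range is an assumption; real code would usually use an array library such as NumPy.

```python
# A tiny 3x3 grayscale image: a 2D array (height x width), one intensity per pixel.
gray = [
    [0, 128, 255],
    [64, 192, 32],
    [255, 0, 100],
]

# A tiny 2x2 RGB image: a 3D array (height x width x 3 channels).
# Each pixel holds its [red, green, blue] intensities.
rgb = [
    [[255, 0, 0], [0, 255, 0]],      # red pixel, green pixel
    [[0, 0, 255], [255, 255, 255]],  # blue pixel, white pixel
]


def shape(array):
    """Return the dimensions of a nested list, e.g. (height, width, channels)."""
    dims = []
    while isinstance(array, list):
        dims.append(len(array))
        array = array[0]
    return tuple(dims)


print(shape(gray))  # (3, 3)
print(shape(rgb))   # (2, 2, 3)
```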

Now that we have a clearer understanding of how an image is represented on a computer, we can proceed to understand how convolutional neural networks work.

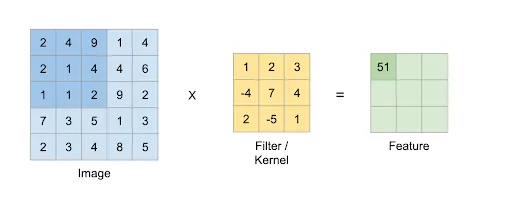

Let’s try to understand how the convolution process works from an intuitive perspective. Refer to the figure below.

Figure 2

Now, let’s break down how a feature map (the output of the convolution) is generated in a convolutional neural network.

First, the input image is represented as an array of pixel values: a 2D array for a black-and-white image, or a 3D array for a color (RGB) image.

Next, we need a feature detector, also known as a filter or kernel, which is applied to extract features from the image. This is done by sliding the feature detector over the image and, at each position, computing the dot product between the values of the feature detector and the corresponding values of the image.

To obtain the feature map, we place the feature detector on the image (indicated by the dark blue square in the input image of Figure 2), multiply each value in the feature detector by the corresponding value of the image, and add up the products. This sum becomes one value of the feature map. The process is repeated for each position of the feature detector on the image.

The resulting output is called a feature map, activation map, or convolved feature map. It highlights the areas of the input image that are most similar to the feature captured by the feature detector.

For example, in Figure 2 we multiply each image value inside the dark blue square by the corresponding value of the feature detector and sum the results:

$$2\cdot1 + 4\cdot2 + 9\cdot3 - 2\cdot4 + 1\cdot7 + 4\cdot4 + 1\cdot2 - 1\cdot5 + 2\cdot1 = 51$$

So the corresponding value in the feature map is 51.
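The same arithmetic as a short Python sketch. Because Figure 2's grid is not reproduced here, the 3×3 layout below is an assumption (three image values per row, read in the order of the equation), and we assume the two negative signs belong to image values; the products, and so the result, are the same either way.

```python
# Assumed 3x3 window of the image (the dark blue square in Figure 2).
image_patch = [
    [2, 4, 9],
    [-2, 1, 4],
    [1, -1, 2],
]

# Assumed 3x3 feature detector, in the same order as the equation.
feature_detector = [
    [1, 2, 3],
    [4, 7, 4],
    [2, 5, 1],
]


def window_response(patch, kernel):
    """Multiply matching entries element-wise and sum the products."""
    return sum(
        p * k
        for patch_row, kernel_row in zip(patch, kernel)
        for p, k in zip(patch_row, kernel_row)
    )


print(window_response(image_patch, feature_detector))  # 51
```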

After computing this value, we move the feature detector to the next position by the stride and repeat the same process. The filter slides over the entire image, producing one feature-map value for each position.

The stride is the step size by which we move the feature detector over the image; it is commonly set to 1 or 2. Convolution identifies features in the image, and a stride larger than 1 also reduces the size of the resulting feature map, making it easier to process. Although some information is lost, the features that the detector responds to are preserved.
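The sliding process can be sketched in plain Python, assuming a single-channel image, a square kernel, and no padding. For an n×n input, a k×k kernel, and stride s, this gives an output side length of ⌊(n − k)/s⌋ + 1, so a larger stride produces a smaller feature map. The image and the edge-detecting kernel are illustrative values, not taken from the figures.

```python
def conv2d(image, kernel, stride=1):
    """Slide a square `kernel` over a 2D `image` (no padding) and return the feature map.

    As in most deep-learning libraries, the kernel is not flipped, so strictly
    speaking this is cross-correlation.
    """
    k = len(kernel)  # kernel is assumed to be k x k
    out_h = (len(image) - k) // stride + 1
    out_w = (len(image[0]) - k) // stride + 1
    feature_map = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            top, left = i * stride, j * stride
            # Multiply the window element-wise by the kernel and sum the products.
            total = sum(
                image[top + a][left + b] * kernel[a][b]
                for a in range(k)
                for b in range(k)
            )
            row.append(total)
        feature_map.append(row)
    return feature_map


# Illustrative 5x5 image: dark (0) on the left, bright (1) on the right.
image = [[0, 0, 1, 1, 1] for _ in range(5)]

# Illustrative vertical-edge detector: responds where brightness rises left to right.
kernel = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]

print(conv2d(image, kernel, stride=1))  # [[3, 3, 0], [3, 3, 0], [3, 3, 0]]
print(conv2d(image, kernel, stride=2))  # [[3, 0], [3, 0]]
```

Here the 5×5 image shrinks to 3×3 with stride 1 and to 2×2 with stride 2, while the strong response (3) at the dark-to-bright edge survives in both.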

For example, when detecting a face, it is essential to preserve the features of the face (such as the eyes, nose, and ears) and ignore the other pixel values. To achieve this, we use a separate feature detector for each feature, which produces a separate feature map, and stack these maps together to form the convolution layer.

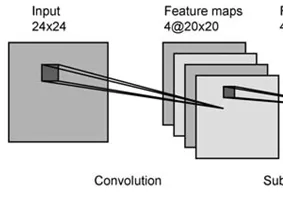

In summary, a convolution layer is created by generating a feature map for each feature (e.g., eyes, nose, ears) using the convolution process and stacking the maps into a single layer. The final convolution layer therefore looks like the figure below: it may consist of many feature maps, each representing a unique feature.

Figure 3
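A convolution layer with several feature detectors can be sketched by running the same sliding window once per detector and stacking the outputs. In a real CNN the detectors are learned during training; the two hand-written line detectors below are illustrative stand-ins for detectors such as "eye" or "nose", and the stride of 1 with no padding is an assumption.

```python
def conv2d(image, kernel):
    """Stride-1, no-padding sliding window (kernel not flipped)."""
    k = len(kernel)
    return [
        [
            sum(image[i + a][j + b] * kernel[a][b] for a in range(k) for b in range(k))
            for j in range(len(image[0]) - k + 1)
        ]
        for i in range(len(image) - k + 1)
    ]


def conv_layer(image, kernels):
    """Apply every feature detector to the image and stack the resulting feature maps."""
    return [conv2d(image, kernel) for kernel in kernels]


# Illustrative 4x4 image with a vertical bar (column 1) and a horizontal bar (row 2).
image = [
    [0, 1, 0, 0],
    [0, 1, 0, 0],
    [1, 1, 1, 1],
    [0, 1, 0, 0],
]

# Two hand-written detectors standing in for learned ones.
vertical_line = [[0, 1, 0], [0, 1, 0], [0, 1, 0]]
horizontal_line = [[0, 0, 0], [1, 1, 1], [0, 0, 0]]

layer = conv_layer(image, [vertical_line, horizontal_line])
print(layer)  # [[[3, 1], [3, 1]], [[1, 1], [3, 3]]]
print(len(layer), "feature maps of size", len(layer[0]), "x", len(layer[0][0]))
```

Each map is largest where its feature occurs: the vertical-line map peaks along the vertical bar, and the horizontal-line map peaks on the row containing the horizontal bar.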

Applying the ReLU Layer to Feature Maps

The next step in a convolutional neural network after obtaining the feature maps from the input image is to apply the Rectified Linear Unit (ReLU) activation function to the feature maps.

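ReLU is defined as $f(x) = \max(0, x)$: it keeps positive values and replaces every negative value with zero. Its purpose is to introduce non-linearity into the derived feature maps. This is important because images are typically nonlinear in nature, and without a nonlinear activation, a stack of convolution and fully connected layers would collapse into a single linear operation.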
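ReLU is applied element by element to each feature map. A minimal Python sketch, using an illustrative feature map:

```python
def relu(x):
    """Rectified Linear Unit: keep positive values, replace negatives with 0."""
    return max(0, x)


def relu_feature_map(feature_map):
    """Apply ReLU to every value of a 2D feature map."""
    return [[relu(value) for value in row] for row in feature_map]


feature_map = [
    [3, -2, 0],
    [-5, 7, -1],
]
print(relu_feature_map(feature_map))  # [[3, 0, 0], [0, 7, 0]]
```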

ReLU is a simple mathematical function, but it is widely used because it improves the performance of convolutional neural networks.

If you want to learn more about the technical details of ReLU, I recommend reading “Understanding Convolutional Neural Networks with A Mathematical Model” by C.-C. Jay Kuo:

https://arxiv.org/pdf/1609.04112.pdf

Applying the Pooling (Downsampling) Layer

In computer vision, the ultimate goal is to develop a neural network that can accurately recognize images, regardless of how they are presented to the input layer.

For example, the network should be able to identify a face even if it is presented in a different orientation or lighting conditions, or even if it is a completely different face with distinct features.

To achieve this, the neural network needs a property called spatial invariance. Essentially, this means that the network does not need to know exactly where a feature is located in the image or how it is positioned. Instead, it should be able to recognize features even if they are slightly distorted or shifted.

This is where pooling comes in. Pooling reduces the dimensionality of the feature maps by combining the outputs of neighboring neurons into a single neuron in the next layer. There are several types of pooling, such as mean (average) pooling and max pooling.

The most popular technique is max pooling, where the maximum value within each region is selected as the output of the pooling operation. This preserves the strongest feature responses while reducing the dimensionality of the feature maps.

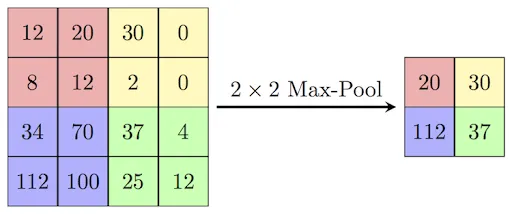

Let’s see how max pooling works in more detail.

Max pooling

Max pooling takes the maximum value from each window (box) of the feature map, as illustrated in the image below.

Figure 4

In the picture above, the 4×4 input is divided into four 2×2 boxes, shown in pink, yellow, purple, and green; this corresponds to a 2×2 window moved with a stride of 2.

We then form a 2×2 Max Pooled Feature Map by selecting the maximum value from each of the boxes. This is essentially what pooling is in a nutshell — reducing the dimensionality of the feature maps while preserving the most important features.
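A minimal max-pooling sketch in plain Python, assuming a 2×2 window moved with a stride of 2. The 4×4 feature map is hypothetical; its four 2×2 quadrants play the role of the four colored boxes in Figure 4.

```python
def max_pool(feature_map, size=2, stride=2):
    """Take the maximum of each size x size window, moving `stride` steps at a time."""
    out_h = (len(feature_map) - size) // stride + 1
    out_w = (len(feature_map[0]) - size) // stride + 1
    return [
        [
            max(
                feature_map[i * stride + a][j * stride + b]
                for a in range(size)
                for b in range(size)
            )
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


# Hypothetical 4x4 feature map (e.g. the output of ReLU).
feature_map = [
    [1, 3, 2, 1],
    [4, 6, 5, 0],
    [7, 2, 9, 8],
    [1, 0, 3, 4],
]
print(max_pool(feature_map))  # [[6, 5], [7, 9]]
```

The 16 input values shrink to 4, a 75% reduction. Replacing the `max(...)` over each window with the window's average would give mean pooling instead.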

Here are some important points:

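- Pooling reduces the size of the feature maps even further; for example, 2×2 max pooling with a stride of 2 keeps only one value out of every four, a 75% reduction.

- It provides a degree of spatial invariance and reduces the number of values, and therefore the number of parameters needed in later layers.

- It reduces noise and retains only the most important information.

- Because it removes unnecessary information, it helps avoid a problem called overfitting.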

That’s pooling in a nutshell. We will cover the mathematics of pooling in more detail in future blogs.

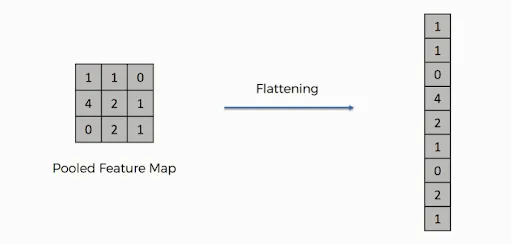

Flattening

In the flattening step, we take the pooled feature map and flatten its values sequentially into a single column vector. This step transforms the 2D pooled feature map into a 1D input vector for the fully connected layer.

Figure 5

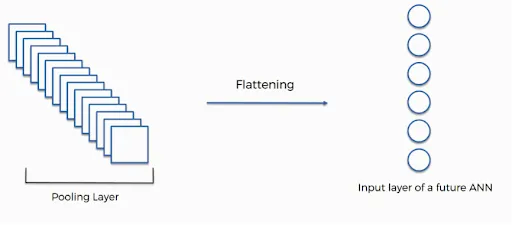

In general, there will be many pooled feature maps created from many feature maps, and these will be concatenated and converted into one very long vector. This vector will then be the input to the fully connected layer for further processing.

In other words, this flattened vector becomes the INPUT LAYER of the fully connected ANN.
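Flattening and concatenation can be sketched as follows. The row-by-row reading order is an assumption; any fixed order works, as long as the same order is used for every image. The pooled maps are hypothetical.

```python
def flatten(feature_maps):
    """Concatenate a list of 2D pooled feature maps into one 1D vector, row by row."""
    return [value for fmap in feature_maps for row in fmap for value in row]


# Two hypothetical 2x2 pooled feature maps.
pooled_maps = [
    [[6, 5], [7, 9]],
    [[1, 0], [2, 3]],
]
vector = flatten(pooled_maps)
print(vector)       # [6, 5, 7, 9, 1, 0, 2, 3]
print(len(vector))  # 8
```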

Full connection with ANN

In the fully connected stage, we connect an ANN to the flattened layer. In a standard ANN these layers are called hidden layers; in a CNN they are called fully connected layers. The picture below shows all the steps in one diagram.
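Each neuron in a fully connected layer computes a weighted sum of every value in the flattened vector, adds a bias, and (in hidden layers) passes the result through an activation such as ReLU. A minimal sketch with hypothetical, hand-picked weights; in practice these weights are learned during training.

```python
def dense(inputs, weights, biases):
    """Fully connected layer: every output neuron sees every input.

    weights[n] holds neuron n's weights (one per input); biases[n] is its bias.
    """
    return [
        sum(w * x for w, x in zip(neuron_weights, inputs)) + bias
        for neuron_weights, bias in zip(weights, biases)
    ]


def relu(values):
    """Element-wise ReLU for a 1D list."""
    return [max(0, v) for v in values]


# Hypothetical flattened input vector and weights for two hidden neurons.
flattened = [3, 1, 2]
weights = [
    [1, 0, 2],
    [0, -1, -1],
]
biases = [1, 0]

hidden = relu(dense(flattened, weights, biases))
print(hidden)  # [8, 0]
```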

Here is a summary: predicting the content of an image with a Convolutional Neural Network involves the following steps:

- The input image is passed to the convolution layer, which creates feature maps.

- The ReLU (rectifier) activation is applied to the output of the convolution layer.

- The output of ReLU is downsampled using max pooling.

- The output of the pooling layer is flattened.

- The flattened vector is sent as the input layer of a fully connected neural network.

- In this example, the fully connected part of the network has two hidden layers and one output layer.

By following these steps, a trained CNN can recognize and classify images with high accuracy.

Let’s take the example of an output layer in a neural network that classifies images as cats or dogs.

This would require a layer with two neurons, where one neuron fires if the image is identified as a dog and the other fires if it’s identified as a cat.

In classification problems like this, we typically have one output neuron per class, and each neuron's output is interpreted as the probability that the image belongs to that class. Regression problems, in contrast, typically use only one output. To see how each layer of a CNN works, you can visit:

3D Visualization of a Convolutional Neural Network

“Here you can draw any number and visualize the behavior layer by layer.”

Training

The training of a CNN is similar to that of an ANN.

Please check out our blog on how an ANN is trained.

During training, the network is shown a large number of labeled images (thousands or even millions), a loss (cost) function measures the error, and backpropagation adjusts the weights. This process is repeated for multiple epochs to obtain a fully trained network.

It’s important to note that the values in the feature detectors are not hand-designed: they are learned during training through backpropagation, which calculates the gradient of the cost function with respect to the weights and biases of the network, and these parameters are then updated using an optimization algorithm such as gradient descent or Adam.
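To illustrate how a feature detector's values are adjusted, here is a deliberately tiny sketch: one 2×2 image window, one 2×2 kernel, a squared-error loss, and plain gradient descent. These are simplifying assumptions; a real CNN backpropagates a classification loss such as cross-entropy through many layers, usually with a framework's automatic differentiation.

```python
def forward(patch, kernel):
    """One feature-map value: multiply patch and kernel element-wise, then sum."""
    return sum(p * k for p_row, k_row in zip(patch, kernel) for p, k in zip(p_row, k_row))


def sgd_step(patch, kernel, target, lr):
    """One gradient-descent update of the kernel for L = 0.5 * (y - target) ** 2.

    Since y = sum(p * k), the gradient is dL/dk[a][b] = (y - target) * patch[a][b].
    """
    error = forward(patch, kernel) - target
    return [
        [k - lr * error * p for p, k in zip(p_row, k_row)]
        for p_row, k_row in zip(patch, kernel)
    ]


patch = [[1, 0], [0, 1]]   # illustrative 2x2 image window
kernel = [[0, 0], [0, 0]]  # the feature detector starts at zero
target = 1.0               # desired response for this window

for step in range(5):
    kernel = sgd_step(patch, kernel, target, lr=0.25)
    print(step, forward(patch, kernel))
# prints 0 0.5, 1 0.75, 2 0.875, 3 0.9375, 4 0.96875 (one pair per line)
```

With these settings the kernel's response moves halfway toward the target on each step, which is the basic mechanism that lets training shape the feature detectors.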

Adjusting the feature detectors this way lets the network learn the most relevant features for the task at hand, such as edges, textures, shapes, and patterns in images, and classify them accurately. Additionally, data augmentation techniques such as flipping, rotating, scaling, and cropping images can be used to increase the size and diversity of the training set and to reduce overfitting. Overall, CNNs are very powerful and versatile models for image classification, object detection, segmentation, and other computer vision tasks, and they have revolutionized the field in recent years.
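Two of the augmentations mentioned above, flipping and rotating, can be sketched on a 2D image stored as nested lists. This is only an illustration; image-processing libraries provide ready-made versions of these transforms, and scaling or cropping would need resizing or slicing that is not shown here.

```python
def flip_horizontal(image):
    """Mirror a 2D image left-to-right."""
    return [list(reversed(row)) for row in image]


def rotate_90_clockwise(image):
    """Rotate a 2D image 90 degrees clockwise (reverse the rows, then transpose)."""
    return [list(row) for row in zip(*image[::-1])]


image = [
    [1, 2, 3],
    [4, 5, 6],
]
print(flip_horizontal(image))      # [[3, 2, 1], [6, 5, 4]]
print(rotate_90_clockwise(image))  # [[4, 1], [5, 2], [6, 3]]
```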

SoftMax and Cross-Entropy

SoftMax

SoftMax is an activation function used at the end of the network to turn its outputs into probabilities.

For example, in a CNN that classifies cat and dog images, two neurons would be used in the output layer: one for identifying dogs and one for identifying cats.

However, the raw values produced by these two neurons are arbitrary real numbers that need not add up to 1. SoftMax converts them into a probability distribution, for example 0.95 for dog and 0.05 for cat, which add up to 1.

According to Wikipedia, the SoftMax function is a generalization of the logistic function to multiple dimensions and is often used as the last activation function in neural networks to normalize the output to a probability distribution.

We will cover SoftMax and its mathematics, including how to write your own SoftMax function in C++, in a future post.

For now, the important thing to note is that after applying SoftMax, each output lies between 0 and 1 and all outputs add up to 1, so they can be interpreted as probabilities.
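A minimal SoftMax sketch in Python. The raw scores for the [dog, cat] neurons are hypothetical; note that they do not add up to 1, but the SoftMax outputs do. Subtracting the largest score before exponentiating is a standard trick to avoid overflow and does not change the result.

```python
import math


def softmax(logits):
    """Convert raw output scores into probabilities that sum to 1."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical raw outputs of the [dog, cat] output neurons.
logits = [3.0, 0.0]
probs = softmax(logits)
print([round(p, 2) for p in probs])  # [0.95, 0.05]
print(sum(probs))                    # 1.0 (up to floating-point rounding)
```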

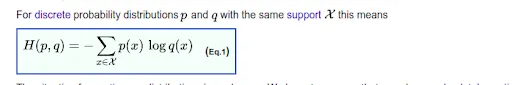

Cross-Entropy

After SoftMax has normalized the output of the network to a probability distribution, we can use the cross-entropy function as the loss (cost) function.

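Wikipedia defines it as $H(p, q) = -\sum_x p(x) \log q(x)$, where $p$ is the true distribution and $q$ is the predicted distribution.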

The cross-entropy function is a popular loss (cost) function for evaluating the performance of a neural network in classification tasks. In many cases it works better than alternatives such as classification error and mean squared error: because of the logarithm, it is sensitive even to small errors in the predicted probability of the correct class, which gives gradient descent a stronger signal and can lead to faster convergence during training.
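A minimal cross-entropy sketch for the dog/cat example, assuming a one-hot target (the true class has probability 1) and the natural logarithm. It shows how the loss grows as the predicted probability of the correct class falls.

```python
import math


def cross_entropy(true_dist, predicted):
    """H(p, q) = -sum(p * log(q)); classes with p = 0 contribute nothing."""
    return -sum(p * math.log(q) for p, q in zip(true_dist, predicted) if p > 0)


target = [1.0, 0.0]  # one-hot label in [dog, cat] order: the image is a dog

confident = cross_entropy(target, [0.95, 0.05])
unsure = cross_entropy(target, [0.6, 0.4])
wrong = cross_entropy(target, [0.05, 0.95])

print(round(confident, 4))  # 0.0513
print(round(unsure, 4))     # 0.5108
print(round(wrong, 4))      # 2.9957
```

A confident correct prediction gives a small loss, an uncertain one a larger loss, and a confident wrong prediction a much larger loss.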

We will go deeper into cross-entropy in future articles.